Understanding HIVE

Hive is a data warehousing infrastructure built on Hadoop. Hadoop provides massive scale-out and fault-tolerance capabilities for data storage and processing (using the MapReduce programming paradigm) on commodity hardware. Hive is designed to enable easy data summarization, ad-hoc querying and analysis of large volumes of data. It provides a simple SQL-based query language called HiveQL, which lets users who are familiar with SQL perform ad-hoc querying, summarization and data analysis easily.

It should also be noted that:

- Hive is not designed for online transaction processing and does not offer real-time queries or row-level updates. It is best used for batch jobs over large sets of immutable data (such as web logs).

We will use the same data as in our earlier Hive tutorial, namely the files batting.csv and master.csv.

Data Source:

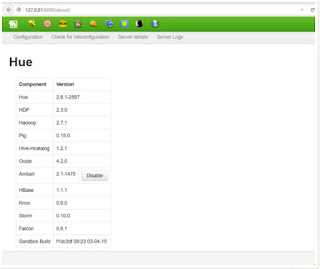

Accessing Hue

You can access Hue by entering the address 127.0.0.1:8000 in your browser and logging in with:

Login ID: Hue

Password: 1111

Uploading Data

The data is uploaded to the /user/hue directory in HDFS. The steps for uploading files to this directory are described in my previous blog posts.

Once the files are uploaded, they should look like this:

Step 1 – Load input file:

First, unzip the data set into a local directory. Then upload the files from the data set using the “File Browser”.

Hue has a button called “Hive”, and inside it are options such as “Query Editor”, “My Queries” and “Tables”.

On the left is the “Query Editor”. A query may span multiple lines, and there are buttons to execute the query, explain it, save it under a name, and open a new window for another query.

Step 2 – Create an empty table and load data into Hive

Under “Tables”, select “Create a new table from a file”. This opens the file browser, where we select the batting.csv file and name the new table “temp_batting”.

Once the data has been loaded, batting.csv no longer appears in /user/hue, because Hive moves the file into the table's storage location rather than copying it.
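If you would rather use the Query Editor than the table wizard, the load can be approximated in HiveQL. This is a minimal sketch, assuming batting.csv was uploaded to /user/hue. The single STRING column `col_value` is a hypothetical layout that keeps each raw CSV line intact; the table the Hue wizard creates may instead have one column per CSV field. Run these statements instead of the wizard, not in addition to it.

```sql
-- Hypothetical staging table: one STRING column holding each raw CSV line.
-- With the default field delimiter (\001), a comma-separated line is not split.
CREATE TABLE temp_batting (col_value STRING);

-- LOAD DATA INPATH (without LOCAL) moves the HDFS file into the table's
-- warehouse directory, so it no longer appears in /user/hue afterwards.
-- OVERWRITE replaces any data already in the table.
LOAD DATA INPATH '/user/hue/batting.csv' OVERWRITE INTO TABLE temp_batting;
```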

Running the following query shows the first 100 rows of the table:

SELECT * FROM temp_batting LIMIT 100;

Step 3 – Create a batting table and transfer data from the temporary table to the batting table
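The statements for this step are not shown here. A minimal sketch follows, assuming temp_batting holds each raw line in a single STRING column named `col_value` (a hypothetical name) and that the player ID, year and runs are the 1st, 2nd and 9th comma-separated fields. Check the header of your copy of batting.csv, since the column order differs between versions of the data set.

```sql
-- Target table with typed columns (column names are illustrative).
CREATE TABLE batting (player_id STRING, year INT, runs INT);

-- split() returns a 0-indexed array, so index 8 is the 9th field.
-- The field positions below are assumptions about the layout of batting.csv.
INSERT OVERWRITE TABLE batting
SELECT
  split(col_value, ',')[0]              AS player_id,
  CAST(split(col_value, ',')[1] AS INT) AS year,
  CAST(split(col_value, ',')[8] AS INT) AS runs
FROM temp_batting
-- Assumption: the header line starts with "playerID"; skip it.
WHERE col_value NOT LIKE 'playerID%';
```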

Step 4 – Create a query to show the highest score per year

We will create a query that uses GROUP BY to show the highest number of runs scored in each year:

SELECT year, max(runs) FROM batting GROUP BY year;
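A slightly extended version of the same query names the aggregate column and sorts the output by year, which makes the result easier to read. The alias `max_runs` is illustrative.

```sql
-- One output row per year: the largest runs value among that year's rows.
SELECT year, max(runs) AS max_runs
FROM batting
GROUP BY year
ORDER BY year;
```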

Step 5 – Get the final result

Finally, we run a query that shows, for each year, the player who scored the most runs.
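The final query is not reproduced here. One way to write it, sketched under the assumption that batting has columns named player_id, year and runs (player_id is a hypothetical name for the player identifier column), is to join batting back to the per-year maxima:

```sql
-- Subquery b holds the maximum runs for each year.
-- Joining on both year and runs keeps only the rows that reach that maximum;
-- if several players tie in a year, each of them is returned.
SELECT a.year, a.player_id, a.runs
FROM batting a
JOIN (
  SELECT year, max(runs) AS runs
  FROM batting
  GROUP BY year
) b
ON (a.year = b.year AND a.runs = b.runs)
ORDER BY a.year;
```

A join is used here because a plain GROUP BY can only return the grouped column and aggregates, not the player who produced the maximum.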

I hope this tutorial helps you use Hive Query Language to calculate baseball statistics.

Thank you!